ACLU calls out Amazon’s facial recognition tool

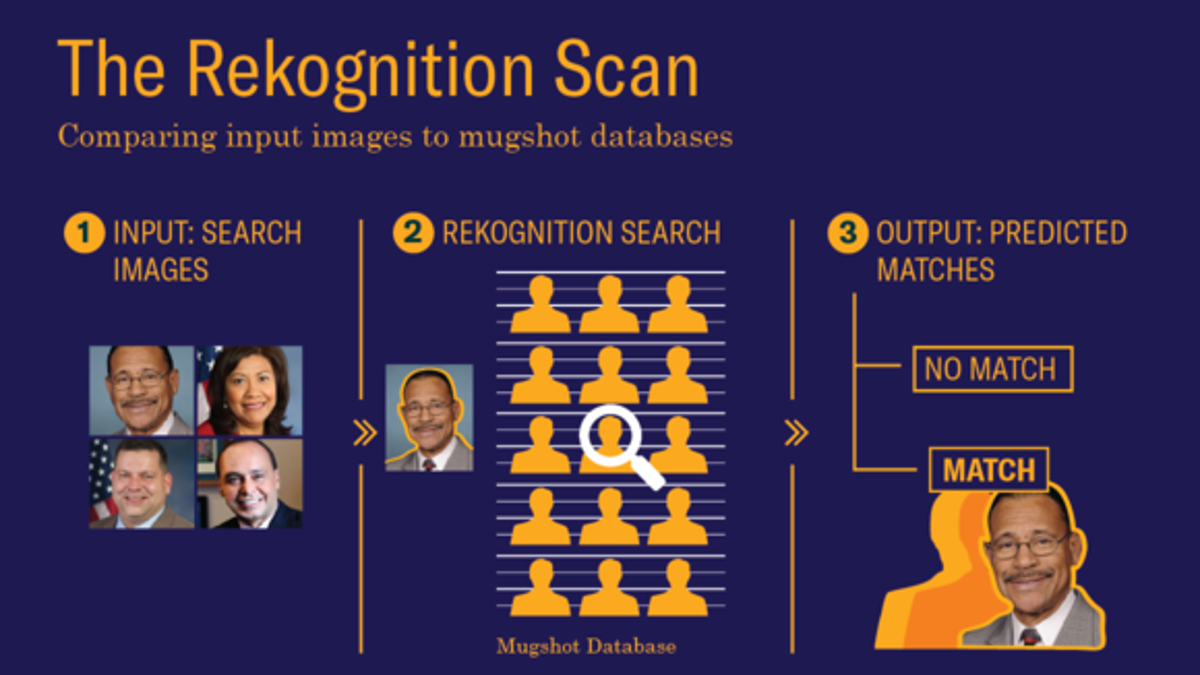

Why the American Civil Liberties Union is calling out Amazon’s facial recognition tool, and what the ACLU found when it compared photos of members of Congress to public arrest photos.

Amazon’s Rekognition facial surveillance technology has wrongly tagged 28 members of Congress as police suspects, according to ACLU research, which notes that nearly 40 percent of the lawmakers identified by the system are people of color.

In a blog post, Jacob Snow, technology and civil liberties attorney for the ACLU of Northern California, said that the false matches were made against a mugshot database. The matches were also disproportionately people of color, he said. These include six members of the Congressional Black Caucus, among them civil rights legend Rep. John Lewis, D-Ga.

The ACLU notes that people of color make up only 20 percent of Congress.

Snow also cited a recent letter to Amazon CEO Jeff Bezos by the Congressional Black Caucus, in which members voiced their concern about potential law enforcement use of Rekognition. “It is quite clear that communities of color are more heavily and aggressively policed than white communities,” the letter says. “This status quo results in an oversampling of data which, once used as inputs to an analytical framework leveraging artificial intelligence, could negatively impact outcomes in those oversampled communities.”


The ACLU echoes the concerns raised by the Congressional Black Caucus. “If law enforcement is using Amazon Rekognition, it’s not hard to imagine a police officer getting a ‘match’ indicating that a person has a previous concealed-weapon arrest, biasing the officer before an encounter even begins,” Snow writes. “Or an individual getting a knock on the door from law enforcement, and being questioned or having their home searched, based on a false identification.”


However, Amazon Web Services questioned how the ACLU conducted its test. “We think that the results could probably be improved by following best practices around setting the confidence thresholds (this is the percentage likelihood that Rekognition found a match) used in the test,” explained an AWS spokesperson, in a statement emailed to Fox News. “While 80 percent confidence is an acceptable threshold for photos of hot dogs, chairs, animals, or other social media use cases, it wouldn’t be appropriate for identifying individuals with a reasonable level of certainty. When using facial recognition for law enforcement activities, we guide customers to set a threshold of at least 95 percent or higher.”

The ACLU said that it used the default match settings that Amazon sets for Rekognition.
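The dispute turns on how a confidence threshold filters candidate matches. As a rough illustration only, the Python sketch below applies the two thresholds mentioned above, 80 percent and 95 percent, to a short list of candidate matches. The similarity scores, the `face_id` values, and the `filter_matches` helper are all hypothetical and are not drawn from the ACLU test or from Amazon's service.

```python
# Illustrative only: the scores and IDs below are invented, not ACLU or Amazon data.

def filter_matches(candidates, threshold):
    """Keep only candidates whose similarity score meets the threshold (percent)."""
    return [c for c in candidates if c["similarity"] >= threshold]

# Hypothetical candidate matches returned for a single probe photo.
candidates = [
    {"face_id": "mugshot-001", "similarity": 81.2},
    {"face_id": "mugshot-002", "similarity": 88.7},
    {"face_id": "mugshot-003", "similarity": 96.4},
]

at_80 = filter_matches(candidates, 80.0)  # the default setting the ACLU says it used
at_95 = filter_matches(candidates, 95.0)  # the minimum AWS says it recommends to law enforcement

print(len(at_80), "candidates reported at 80 percent")
print(len(at_95), "candidates reported at 95 percent")
```

In this made-up case, raising the threshold drops the two lower-scoring candidates. Whether it would have removed the false matches the ACLU reported depends on the actual scores, which are not given here.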

Amazon Web Services told Fox News that Rekognition is designed to efficiently trawl through vast quantities of image data. “It is worth noting that in real world scenarios, Amazon Rekognition is almost exclusively used to help narrow the field and allow humans to expeditiously review and consider options using their judgement (and not to make fully autonomous decisions), where it can help find lost children, restrict human trafficking, or prevent crimes,” explained the spokesperson.


“We have seen customers use the image and video analysis capabilities of Amazon Rekognition in ways that materially benefit both society (e.g. preventing human trafficking, inhibiting child exploitation, reuniting missing children with their families, and building educational apps for children), and organizations (enhancing security through multi-factor authentication, finding images more easily, or preventing package theft),” said the spokesperson. “We remain excited about how image and video analysis can be a driver for good in the world, including in the public sector and law enforcement.”

This is not the first time that facial recognition has sparked controversy. Microsoft, for example, recently urged the government to regulate the technology, citing its potential for abuse.

In a blog post, Microsoft President Brad Smith warned that “without a thoughtful approach, public authorities may rely on flawed or biased technological approaches to decide who to track, investigate or even arrest for a crime.”

Earlier this year, researchers from MIT and Stanford University reported gender and skin-type bias in commercial artificial-intelligence systems.

Fearing threats, however, some schools are looking to facial recognition technology for additional security.

Fox News’ Christopher Carbone and the Associated Press contributed to this article.

Follow James Rogers on Twitter @jamesjrogers