Fox News Flash top headlines for June 11


Researchers are showing off creepy new software that uses machine learning to let people add, delete or change the words coming out of someone's mouth in a video.

The work is the latest evidence that editing what gets said in videos and creating so-called deepfakes is becoming easier, posing a potential problem for election integrity and for the broader battle against online disinformation.

The researchers, who come from Stanford University, the Max Planck Institute for Informatics, Princeton University and Adobe Research, published a number of examples showing off the technology.

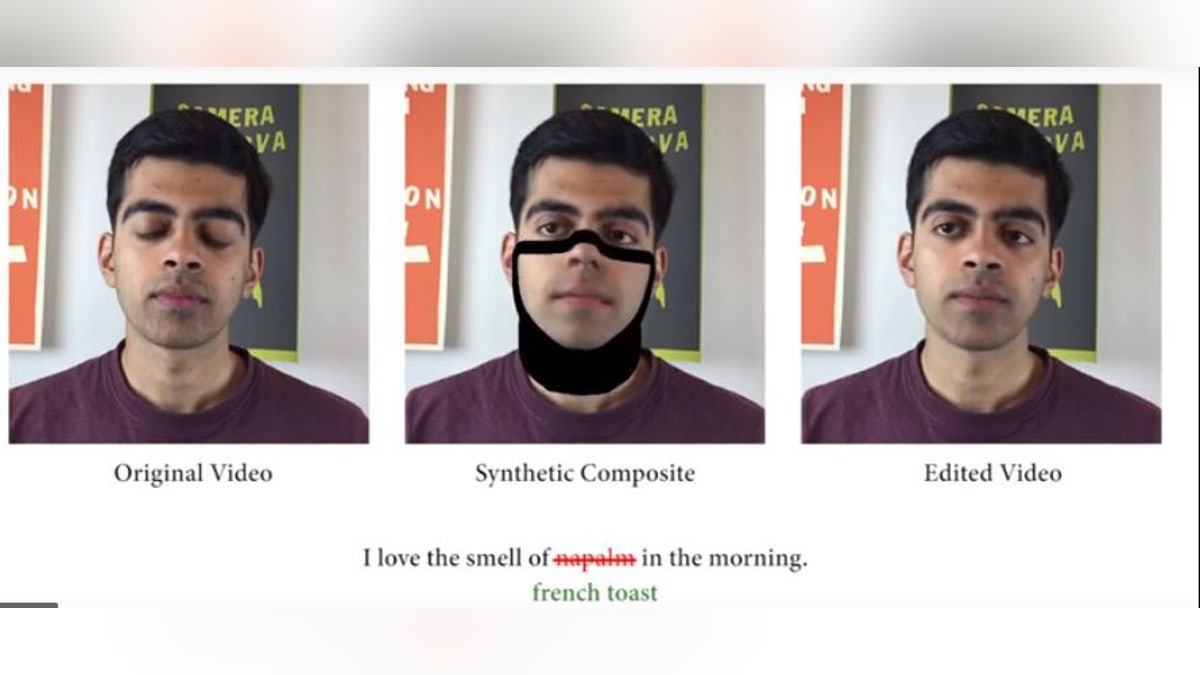

In one, they changed someone's statement from "I love the smell of napalm in the morning," which is a line from the film Apocalypse Now, to "I love the smell of French toast in the morning."

Researchers tested software that uses 3D models of a person's face to build new footage. (YouTube)

To produce the video fakes, the scientists combined several techniques. First, they scan a target video to isolate the phonemes spoken by the person. Phonemes are the sounds that make up words, such as "oo" and "fuh." Next, they match those sounds with the facial expressions that accompany each one. Finally, they use the target video to build a 3D model of the lower half of the person's face.
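To make the matching step more concrete, here is a minimal Python sketch. It is not the researchers' code: the ARPAbet-style phoneme labels, the mouth-shape ("viseme") names and the small lookup table are illustrative assumptions. It only shows the general idea of turning a timed list of speech sounds into a sequence of mouth shapes that a face model could then render.

```python
from dataclasses import dataclass

# Hypothetical, heavily simplified phoneme-to-viseme table (ARPAbet-style
# labels). Real systems use larger, carefully tuned mappings.
PHONEME_TO_VISEME = {
    "F": "lip_teeth",   # "fuh": lower lip touches upper teeth
    "V": "lip_teeth",
    "UW": "rounded",    # "oo": rounded, protruded lips
    "OW": "rounded",
    "P": "closed",      # lips pressed together
    "B": "closed",
    "M": "closed",
    "AA": "open",       # jaw dropped, mouth open
    "AE": "open",
}


@dataclass
class TimedPhoneme:
    phoneme: str
    start: float  # seconds into the video
    end: float


def to_viseme_track(phonemes):
    """Map timed phonemes to (viseme, start, end) segments.

    Sounds missing from the table fall back to a "neutral" mouth shape,
    and back-to-back segments with the same viseme are merged into one.
    """
    track = []
    for p in phonemes:
        viseme = PHONEME_TO_VISEME.get(p.phoneme, "neutral")
        if track and track[-1][0] == viseme and track[-1][2] == p.start:
            # Same mouth shape continues: extend the previous segment.
            track[-1] = (viseme, track[-1][1], p.end)
        else:
            track.append((viseme, p.start, p.end))
    return track


if __name__ == "__main__":
    # The word "food" as three timed sounds: F, UW ("oo"), D.
    word = [
        TimedPhoneme("F", 0.0, 0.1),
        TimedPhoneme("UW", 0.1, 0.3),
        TimedPhoneme("D", 0.3, 0.4),
    ]
    print(to_viseme_track(word))
```

Because several different sounds produce the same mouth shape (for example "p," "b" and "m" all close the lips), a lookup like this lets a system reuse footage of one sound to stand in for another, which is part of what makes word-level editing possible.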

There is a range of potentially harmful applications for this technology, particularly at a time when Silicon Valley firms like Facebook and Google are already dealing with a backlash over the spread of misinformation and fake news.


"Although methods for image and video manipulation are as old as the media themselves, the risks of abuse are heightened when applied to a mode of communication that is sometimes considered to be authoritative evidence of thoughts and intents," the researchers write in a blog post. "We acknowledge that bad actors might use such technologies to falsify personal statements and slander prominent individuals."

They also suggest some remedies to mitigate the negative impact of video fakes, including appropriate labeling and watermarking, although such cues can be removed or edited out.

In the meantime, it's probably best to verify that what you're looking at is from a trustworthy source.