Unlocking your phone with facial recognition even when you have your glasses on

Face ID uses facial recognition technology to scan your face and verify your identity. When activated, the feature uses the front-facing camera, or selfie cam, to securely confirm that you are the owner of the iPhone.

Think twice before posting that selfie on Facebook; you might be added to a police database.

With all the excitement around AI, thanks to ChatGPT, many are cheering for this technology and loving its optimizing powers. Unfortunately, this onion has many layers; the deeper we go, the stinkier it gets.

We can list dozens of reasons to fear AI, such as the possibility of it becoming more intelligent than humans and leading to unexpected and uncontrollable outcomes, job displacement, weaponization and many others. However, one issue is already rearing its ugly head: privacy violations are growing and causing damage.

Clearview AI's software uses artificial intelligence algorithms to analyze images of faces and match them against a database of over 3 billion photos that have been scraped from various sources, including social media platforms like Facebook, Instagram, and Twitter, all without the users' permission. (Kurt Knutsson)

Unveiling the identity of the most notorious facial recognition company you've never heard of

AI tech company Clearview AI has made headlines for its misuse of consumer data. It provides facial recognition software to law enforcement agencies, private companies and other organizations. Its software uses artificial intelligence algorithms to analyze images of faces and match them against a database of over 3 billion photos that have been scraped from various sources, including social media platforms like Facebook, Instagram and Twitter, all without the users' permission. The company has already been fined millions of dollars in Europe and Australia for such privacy breaches.


Why law enforcement is Clearview AI's biggest customer


Critics of the company argue that the use of its software by police puts everyone into a "perpetual police lineup." Whenever the police have a photo of a suspect, they can compare it to your face, which many people find invasive.

Clearview AI's system allows law enforcement to upload a photo of a face and find matches in a database of billions of images that the company has collected. It then provides links to where matching images appear online. The system is known to be one of the most powerful and accurate facial recognition tools in the world.
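Clearview AI has not published exactly how its matching works. As a rough illustration only, many face-search systems turn each face into a list of numbers (an "embedding") and return stored photos whose numbers are most similar to those of the uploaded photo. The toy Python sketch below assumes that general approach; the three-number embeddings, the example.com links, the `search` function and the 0.9 match threshold are all hypothetical and are not Clearview AI's actual system.

```python
import math

# Hypothetical sketch: real systems compute embeddings with a neural network;
# here they are tiny hand-picked vectors for illustration only.

def cosine_similarity(a, b):
    """Score how closely two embeddings point in the same direction (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def search(probe, gallery, threshold=0.9):
    """Return (url, score) pairs whose similarity to the probe meets the threshold, best match first."""
    hits = []
    for url, embedding in gallery.items():
        score = cosine_similarity(probe, embedding)
        if score >= threshold:
            hits.append((url, score))
    return sorted(hits, key=lambda hit: hit[1], reverse=True)

# Hypothetical gallery of scraped photos, keyed by the page where each appeared.
gallery = {
    "https://example.com/photo-a": [0.90, 0.10, 0.40],  # the person in the uploaded photo
    "https://example.com/photo-b": [0.10, 0.90, 0.20],  # a clearly different face
    "https://example.com/photo-c": [0.85, 0.15, 0.45],  # a different person who looks similar
}

probe = [0.88, 0.12, 0.42]  # embedding of the uploaded photo

for url, score in search(probe, gallery):
    print(url, round(score, 3))
```

In this made-up example, the lookalike in photo-c scores almost as high as the true match in photo-a (both above 0.99), so both links come back as "matches." That is the kind of false match critics worry about when a single photo is searched against billions of faces.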


Although Clearview AI has been banned from selling its services to most U.S. companies for breaking privacy laws, police agencies are exempt from that ban. The company's CEO, Hoan Ton-That, says hundreds of police forces across the U.S. use its software.

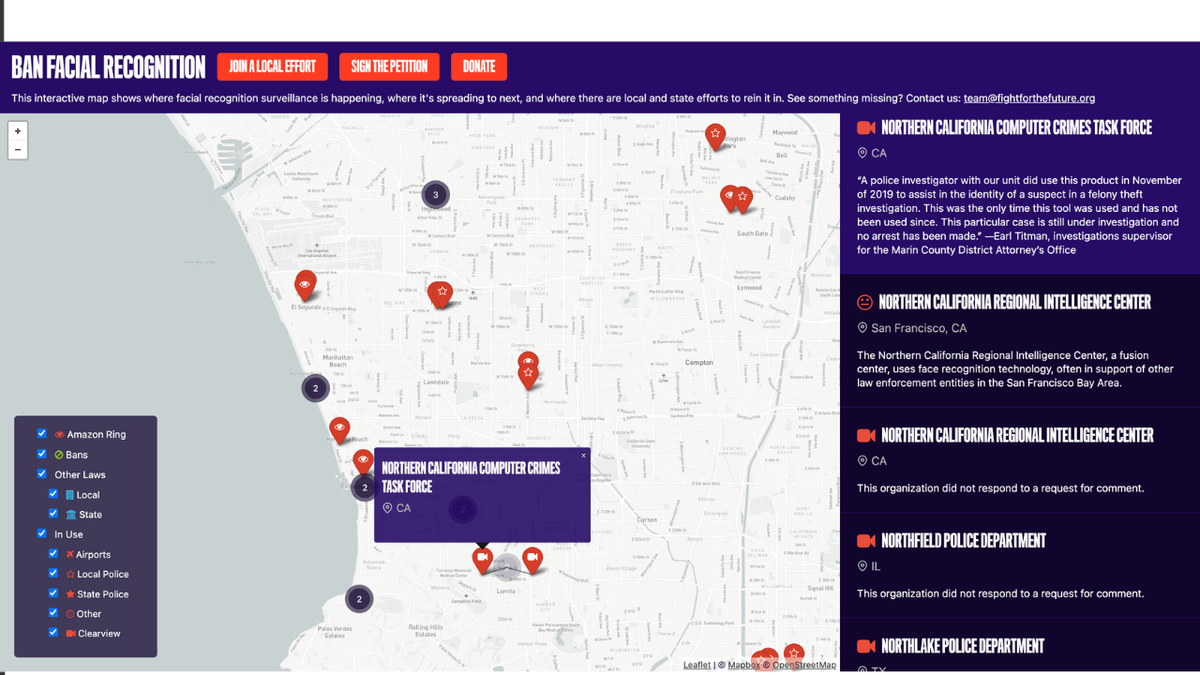


You can visit this website to see if police stations in your neighborhood are using this tech. (banfacialrecognition.com)

In most cases, however, police use Clearview AI's facial recognition technology only for serious or violent crimes. The exception is the Miami Police, who have openly admitted to using the software for every type of crime.


What are the dangers of using this facial ID software?

Clearview AI claims a near-100% accuracy rate, yet these figures are often based on mugshots, which are typically clear, front-facing photos. Because the system's accuracy depends on the quality of the images fed into it, lower-quality photos can have serious negative ramifications. For instance, the algorithm can confuse two different people and give law enforcement a positive identification when, in fact, the person in question is totally innocent and the facial ID was wrong.


Civil rights campaigners want police forces that use Clearview to disclose openly when they use it and to have its accuracy tested in court. They are skeptical of the company's claims and want the algorithm scrutinized by independent experts. This would help ensure that people prosecuted with the help of this technology are, in fact, guilty and not victims of a flawed algorithm.


Final Thoughts

Clearview AI has sometimes been shown to work in a defendant's favor. Andrew Conlyn of Fort Myers, Florida, for example, had the charges against him dropped after Clearview AI was used to find a crucial witness. Even so, critics believe the software comes at too high a price for civil liberties and civil rights. While this technology may help some people, its intrusive nature is terrifying.


Would you support this technology if you knew it could help you win a case, or are its ramifications too dangerous? Let us know! We'd love to hear from you.

For more of my tips, subscribe to my free CyberGuy Report Newsletter by clicking the "Free newsletter" link at the top of my website.

Copyright 2023 CyberGuy.com. All rights reserved.