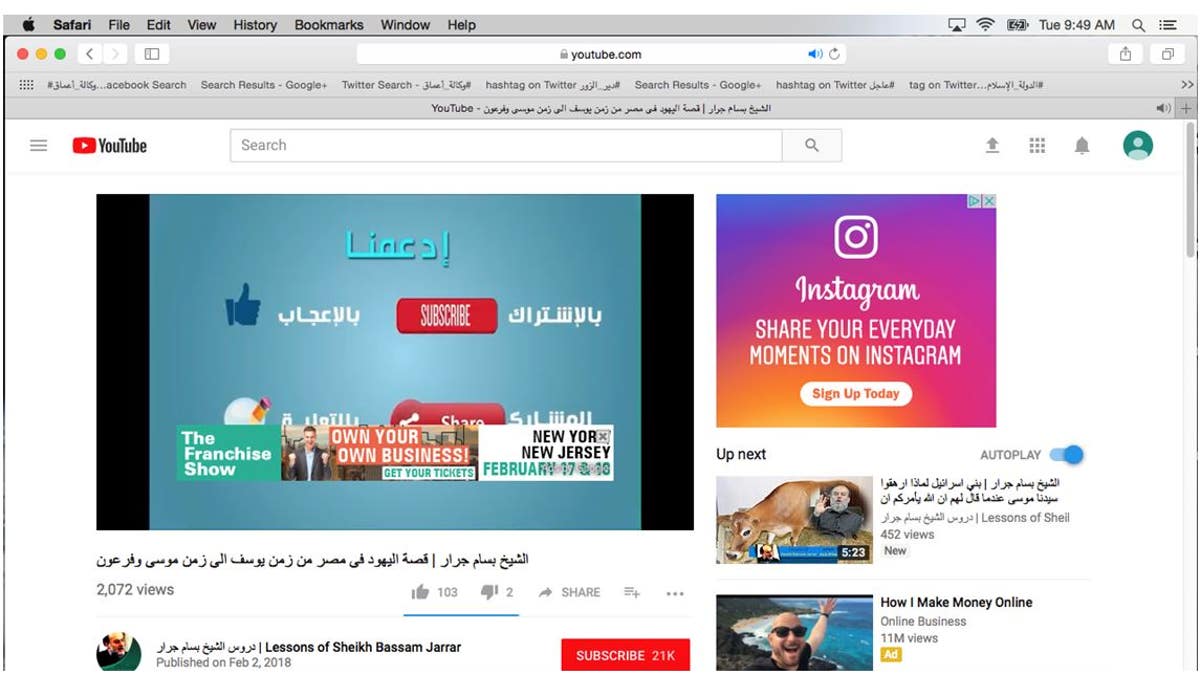

Screenshot showing advertisements on a YouTube channel that features videos of Sheikh Bassam Jarrar. Activists say Jarrar's videos are anti-Semitic, YouTube says they don't violate any policies. (YouTube/Courtesy Eric Feinberg/GIPEC)

YouTube appears to be doing damage control less than a week after a report revealed advertisements from hundreds of big-name brands were appearing next to videos with content ranging from Nazi and North Korean propaganda to pedophilia.

But when Fox News reached out to ask the company about ads running alongside a series of videos featuring a notoriously anti-Semitic scholar, whom Fox first reported on back on Valentine's Day, YouTube defended both the content of the videos in question and the fact that people are making money off them.

Last week, a CNN investigation revealed advertisements for companies such as Amazon and Facebook, and even a 20th Century Fox film, were appearing on YouTube channels promoting Nazis, white nationalism, pedophilia and North Korean propaganda.

When an advertisement appears on a YouTube channel, that means the channel/video is "monetized," and that both YouTube and the creators of the video are making money off advertiser dollars.

A screenshot from February 2018 of Jarrar's YouTube channel. It has since gained thousands of subscribers. (YouTube/Courtesy Eric Feinberg/GIPEC)

Advertisers who do business with YouTube have a certain degree of control over where their ads appear on the platform. In a statement to CNN, a YouTube spokeswoman admitted that "even when videos meet our advertiser friendly guidelines, not all videos will be appropriate for all brands." She added that the company is "committed to working with our advertisers and getting this right."

YouTube CEO Susan Wojcicki reportedly held a conference call on April 26 with 30 of the company's advertiser and agency partners, and was expected to discuss ongoing concerns from various brands that their advertisements are appearing in places they shouldn't. A YouTube representative did not respond to a question about whether the call took place. But it was the company's defense of a YouTube channel brought to its attention by Fox News that is upsetting some advocates.

YOUTUBE DEFENDS CHANNEL FEATURING CONTROVERSIAL CLERIC

Sheikh Bassam Jarrar is a Muslim cleric who was apparently expelled from Israel over his suspected membership in Hamas, a group designated as a terrorist organization in 1997 by the U.S. State Department. In previous lectures that have since been removed from YouTube, Jarrar has suggested that Jewish people are "apes" and "pigs," and even provided justifications for the Holocaust, according to a translation by the Middle East Media Research Institute (MEMRI).

On Valentine's Day this year, Fox News reported that a YouTube channel dedicated to Jarrar's lectures featured advertisements for companies like Instagram. The channel itself contains videos with titles such as "The condition of Muslim superiority over Israel," and "America 's recognition of Jerusalem as the capital of the Jews God hated their resurrection and discouraged them." Those titles are Google translations from the original Arabic.

When asked by Fox News about the appearance of advertisements on this channel, a YouTube spokesperson acknowledged previous Fox reporting on the issue, and said "YouTube is an open platform and we allow free expression as long as it does not violate our Community Guidelines."

According to YouTube's website, those guidelines include a ban on what the company calls "hateful content," which it defines as "content that promotes violence against or has the primary purpose of inciting hatred against individuals or groups based on certain attributes, such as: race or ethnic origin [and] religion," among others.

In e-mails with Fox, YouTube added that videos flagged for being in violation of their guidelines are "immediately removed," and that advertisers are given "controls to exclude specific channels and various types of content." The channel featuring Jarrar's videos, they said, "does not violate community or advertiser friendly guidelines."

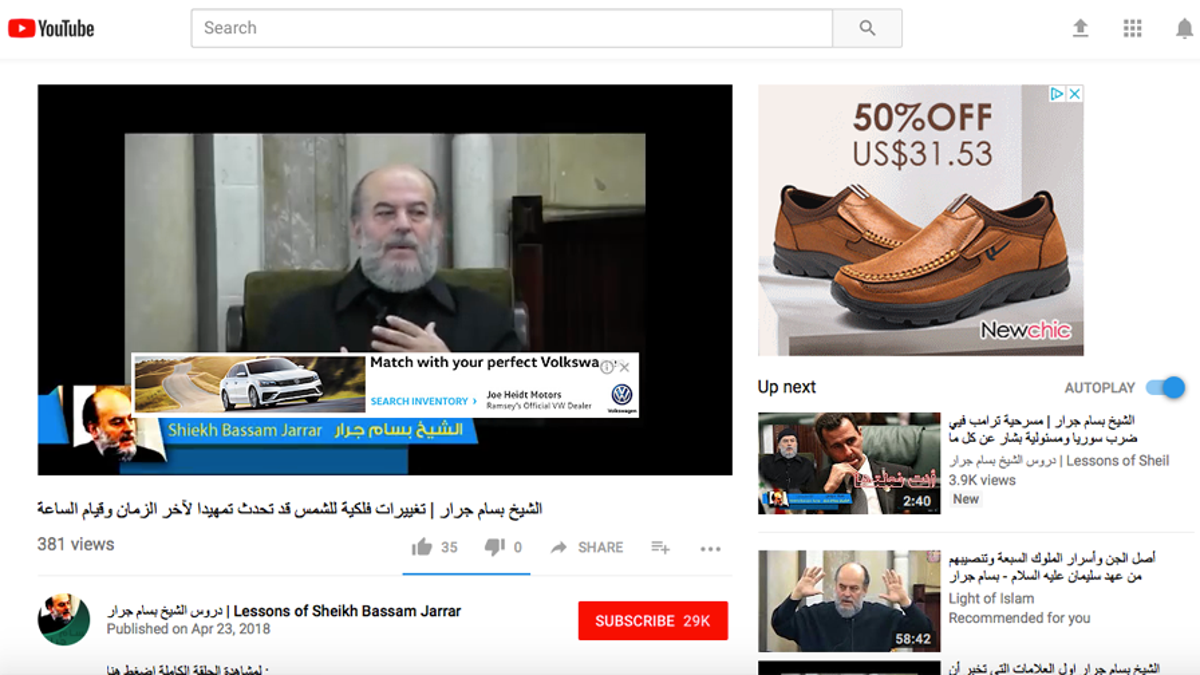

Screenshot showing more advertisements on a YouTube channel denounced by advocates. (YouTube/Courtesy Eric Feinberg/GIPEC)

Since Fox News' February reporting, that same YouTube channel has gained nearly 10,000 new subscribers, and it still features advertisements from companies like JustForMen, AncestryDNA, and a local Volkswagen dealer. When Volkswagen of America's corporate communications office was asked about one of its dealers' ads appearing on the channel, and about YouTube's subsequent defense, a representative made the company's feelings clear.

“Volkswagen of America (VWoA) is committed to ensuring our brand’s advertising is associated with ethical content and strongly encourages Volkswagen dealers to similarly embrace this commitment,” a statement read. “VWoA does not purchase any advertising within YouTube user generated content and will not consider advertising on this platform until Google evolves their product to allow for more stringent content standards that guarantee brand safety and suitability.”

"IF THIS DOESN'T VIOLATE THEIR COMMUNITY GUIDELINES, NOTHING DOES"

It seems VWoA isn’t the only one in disagreement with YouTube's assessment of Jarrar’s lectures. "There is no question that this channel features grotesque anti-Semitic slurs which have been used to incite oppression and violence against Jews for centuries," says Roz Rothstein, co-founder and CEO of StandWithUs, an international Israel education organization.

Rothstein, who is the daughter of Holocaust survivors, added, "This hate has no place on YouTube or anywhere else, and it is outrageous that the company is refusing to take action. If this doesn't violate their community guidelines, nothing does."

Steven Stalinsky, MEMRI’s executive director, also disagreed with YouTube's defense of the channel featuring Jarrar's lectures, and added that this is part of what he calls an ongoing problem with YouTube.

The media arm of Hezbollah, which has also been designated as a terrorist organization by the State Department, has an official YouTube channel with several monetized videos, Stalinsky pointed out. "[YouTube is] definitely good at removing ISIS content, but when it comes to some of the other content they're not as good, for sure," he added. “I don't know how anyone could say that this isn't anti-Semitic.”

The Anti-Defamation League, a group that is apparently working with YouTube to change some of its policies, pointed to a statement issued last week that also suggested ads appearing alongside offensive content is “an ongoing problem.” Brittan Heller, director of ADL’s Center for Technology and Society, said the ADL is now calling “on YouTube and other platforms to better explain to the public why this is a recurring issue and how their technology developed to the point where a shoe ad appearing on a Nazi video is a regular occurrence."

“ALWAYS BEEN A SMALL PROBLEM”

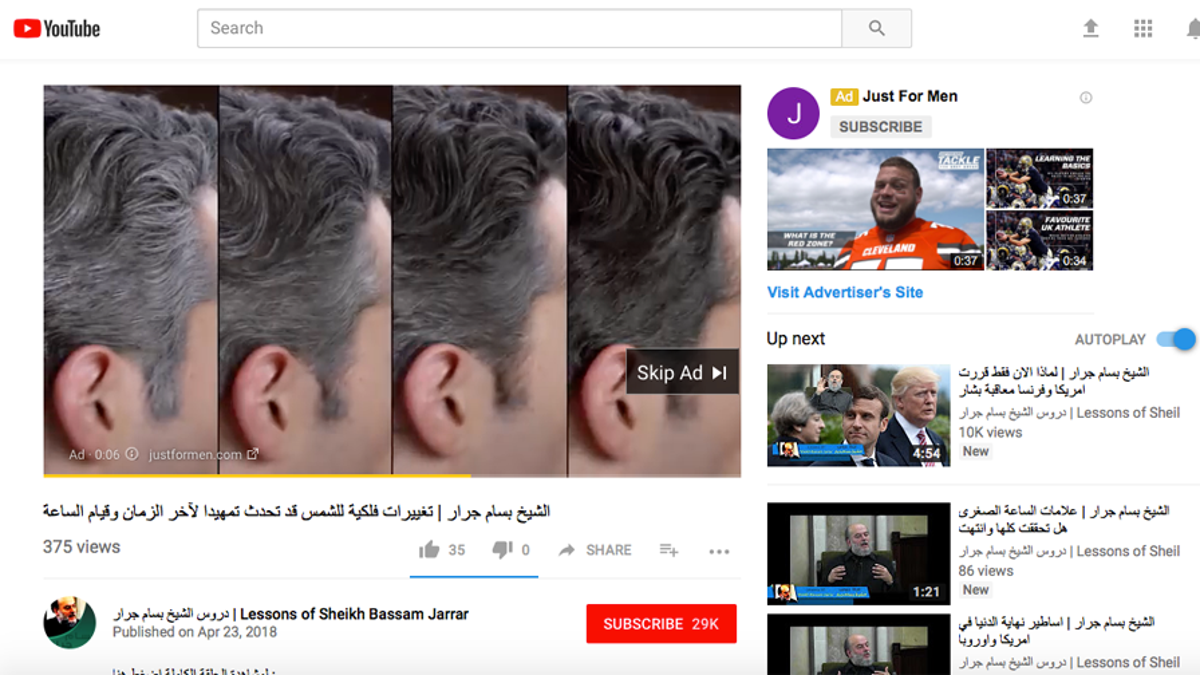

More advertisements on a YouTube channel denounced by advocates. (YouTube/Courtesy Eric Feinberg/GIPEC)

In March 2017, YouTube experienced an exodus of major advertisers after similar reports of ads appearing alongside questionable content. Within a week, Fox reported on a series of ISIS recruiting videos that cropped up in the wake of a terror attack in London. Shortly after, a top executive for Google (YouTube's parent company) was quoted as saying that this kind of thing "has always been a small problem," and attributed much of the uproar to increased public awareness.

That, of course, was before an analogous situation unfolded just a few months later in November 2017, and again last month when 20th Century Fox joined a handful of companies who asked to have their advertisements pulled from the YouTube channel for Infowars, known for trafficking in conspiracy theories.

The Infowars dustup came after a December 2017 statement from YouTube's Wojcicki, who admitted in a company blog post that "some bad actors are exploiting our openness to mislead, manipulate, harass or even harm." She also promised to boost the number of people "working to address content that might violate our policies" to 10,000 in 2018.

Eric Feinberg, a founding partner of deep web analysis company GIPEC, says he thinks he knows why Google and YouTube have failed to fix a problem that has "always" been on their radar. "These companies, they have no moral responsibility to anything except the almighty dollar," Feinberg argued. He says the company's reliance on artificial intelligence to remove videos is more of a cost-cutting measure than anything else.

Earlier this month, Google revealed in another blog post that from October to December 2017, more than 8 million objectionable videos were removed from the platform, with some 6.7 million flagged "by machines, rather than humans." The savings gained from that automation, Feinberg says, come at a dangerous price.

"They are playing fast and loose with our security, and these videos are being used to radicalize and incite," according to Feinberg. "These are multi-billion dollar companies and they want to rely on free labor. That's like getting on an airplane and having your fellow passenger doing your TSA checks," he added.