Twitter executive 'troubled' by reports platform was misused

In congressional testimony, acting general counsel Sean Edgett says Twitter is addressing challenge of 'state-sponsored manipulation of elections.'

There was the ISIS attack on a chemical plant in southern Louisiana last September. Two months later, an outbreak of the deadly Ebola virus occurred in Atlanta on the same day a video began circulating on social media of an unarmed black woman being shot to death by police in the Georgia city.

What all these shocking and disparate stories have in common is twofold: none of them is true, and all of them originated from a group of Russian cyber trolls working out of a nondescript office building in St. Petersburg.

In addition, throughout last year’s presidential election season, dozens of stories circulated on Twitter, Facebook and other social media attacking Democratic candidate Hillary Clinton about everything from supposedly having poor mental health to allegedly fostering secret ties with Islamic extremists.

With top executives from Facebook, Twitter and Google testifying Tuesday before a Senate Judiciary subcommittee on Russia’s attempts to influence U.S. elections and sow discord across the country via social media, many questions remain about how these Russian trolls operate, the veracity of the so-called news they are spreading and what tech giants in Silicon Valley are doing to combat this scourge.

“We’re pretty sure Russia is behind this given that we’ve seen the building they use, know that they’ve put hundreds of millions of dollars into this effort and that they have about 200 employees working on this,” Philip Howard, a professor of internet studies at the University of Oxford and research director of the Oxford Internet Institute, told Fox News. “The problem combatting them is different with each social media platform.”

On the world’s most popular social media site, Facebook, Russian trolls use fake names and backgrounds paired with stolen photos to pose as American citizens and spread either false news stories or hacked information.

In one instance, an account under the name Melvin Reddick of Harrisburg, Pa., posted a link last June to a website called DCLeaks, which displayed material stolen from a number of prominent American political figures. While the information posted on the site appeared to be genuine, Reddick did not exist: no records in Pennsylvania appear under his name, and his photos were taken from an unsuspecting Brazilian.

While trolls in Russia have also used individual accounts on Twitter to disseminate false or incendiary news, the more common practice on that platform is so-called bot farming, in which hundreds, and at times thousands, of automated accounts send out identical messages seconds apart, in the exact alphabetical order of their made-up names.

On Election Day last year, a group of bots on Twitter blasted out the hashtag #WarAgainstDemocrats more than 1,700 times.

Experts say that while these trolls may use various methods to try to disguise their Russian identities, such as changing their IP addresses, they are easily tracked by their frequent screw-ups (some accidentally leave their Twitter location setting turned on, use credit cards linked to the Russian government or write their posts in Cyrillic).

Preventing them from operating, however, is a different story.

Groups like Howard’s Oxford Internet Institute say that they can monitor questionable accounts and alert tech companies, but to make real progress, and to openly prove that Russia is behind these attacks, Facebook and Twitter need to be more transparent.

“The only way to see if these accounts are actually Russian is for Facebook and Twitter to be more open and tell us,” Howard said. “That is why these testimonies are so important.”

Facebook’s testimony, obtained ahead of the company’s appearance before the Senate, revealed that posts generated by Russia's Internet Research Agency potentially reached as many as 126 million users between January 2015 and August 2017.

Twitter was expected to tell the same subcommittee that it has uncovered and shut down 2,752 accounts linked to the same group, Russia's Internet Research Agency, which is known for promoting pro-Russian government positions.

“Twitter believes that any activity of that kind, regardless of magnitude, is unacceptable, and we agree that we must do better to prevent it,” a source familiar with Twitter’s testimony said in an email to Fox News. “State-sanctioned manipulation of elections by sophisticated foreign actors is a new challenge for us, and one that we are determined to meet.”

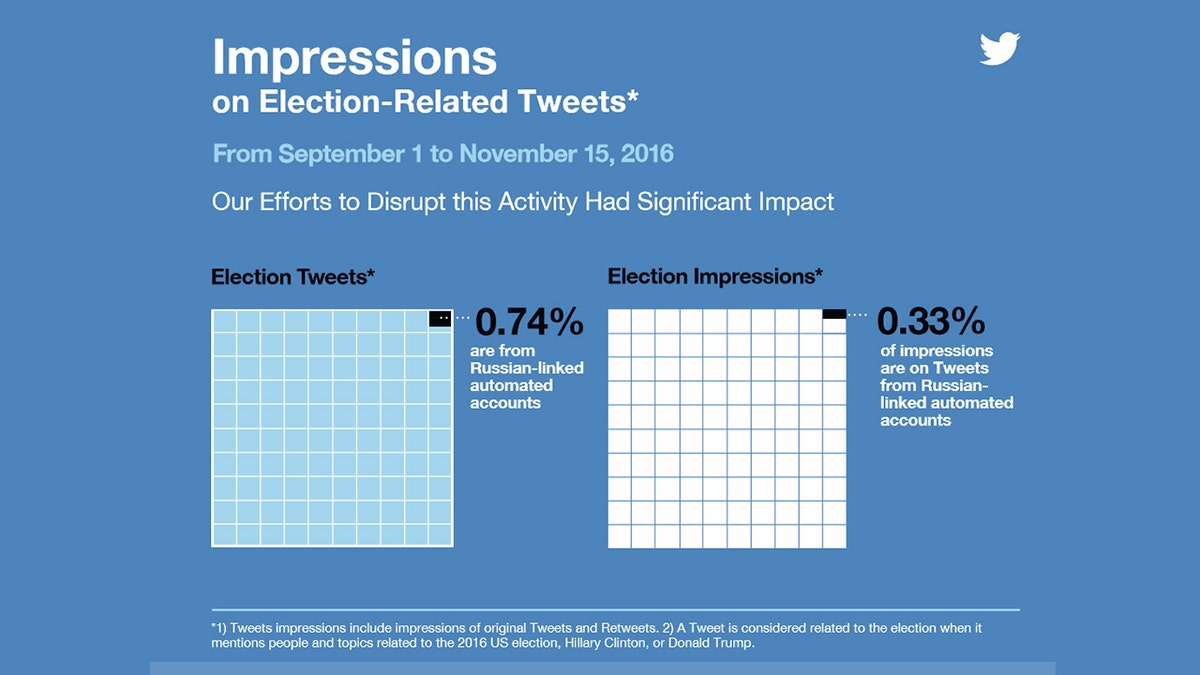

(Twitter)

Although the more than 2,700 Russia-linked accounts are nearly 14 times the number Twitter handed over to congressional committees three weeks ago, the company maintains that the number of Russia-linked accounts tweeting election-related content was “small in comparison to the total number of accounts” on the social media site, the person familiar with the testimony said, adding that the accounts did not rig the election in favor of President Trump.

South Carolina Sen. Lindsey Graham, the Republican chairman of the Judiciary subcommittee holding the hearing, said in a statement that manipulation of social media by terrorist organizations and foreign governments is "one of the greatest challenges to American democracy" and he wants to make sure the companies are doing everything possible to combat it.

"Clearly, to date, their efforts have been unsuccessful," Graham said.

The Associated Press contributed to this report.