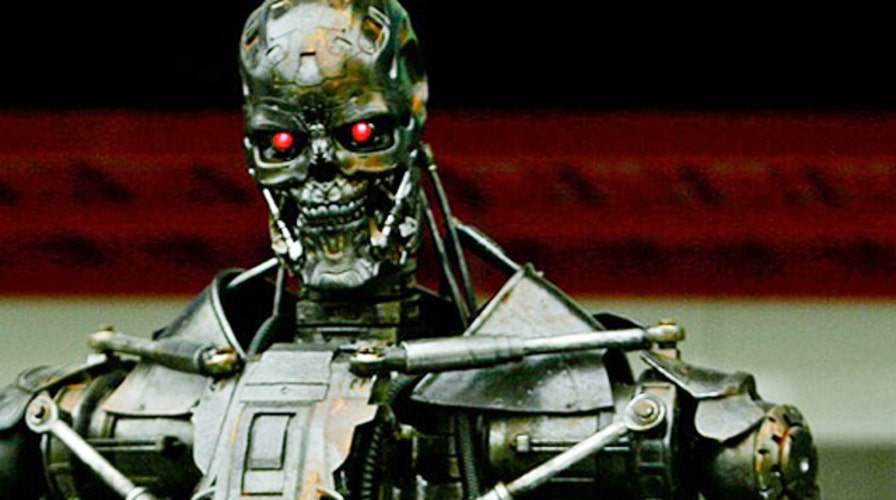

Tech experts warn of 'killer robot' arms race, call for ban

In an open letter, scientists describe 'autonomous weapons' as the 'Kalashnikovs of tomorrow'

Imagine a future where artificially intelligent (AI) military robots decide who to kill, when and why -- entirely on their own, without human intervention. Stephen Hawking, Elon Musk and Steve Wozniak have -- and, together, they’re calling for humanity to stop the terrifying possibility before it starts.

The world-renowned intellectuals today joined some 1,000 thought leaders and AI and robotics researchers in warning that autonomous weapons could set off the next global arms race. That is, if we don’t stop the killer machines first.


Their stark message appears in an open letter published online today by the Boston-based Future of Life Institute (FLI). The missive -- also notably signed by Google DeepMind chief executive Demis Hassabis, Google research director Peter Norvig and cognitive scientist Noam Chomsky -- calls for a ban on “offensive autonomous weapons.” It will be formally presented tomorrow at the opening of the International Joint Conference on Artificial Intelligence in Buenos Aires, Argentina.

The letter warns: “If any major military power pushes ahead with AI weapon development, a global arms race is virtually inevitable, and the endpoint of this technological trajectory is obvious: autonomous weapons will become the Kalashnikovs of tomorrow.”

How soon is “tomorrow,” according to the leading minds behind the grim, if not alarming, call to action? We’re talking years. “Artificial Intelligence (AI) technology has reached a point where the deployment of such systems is -- practically if not legally -- feasible within years, not decades,” the letter reads.


Its authors cite armed assassin quadcopters “that can search for and eliminate people meeting certain pre-defined criteria” as an example of a type of technology that can “select and engage targets without human intervention.” (You might recall that, just last week, a consumer drone jerry-rigged with a handgun set the Internet ablaze, though it appears to have been controlled by a lowly human.)

The four-paragraph letter isn’t all doom and gloom. It briefly acknowledges that AI war machines can benefit humans by replacing them in combat, potentially reducing casualties. On the flip side, however, they can be disastrous, potentially “lowering the threshold for going to battle,” fueling terrorism and destabilizing nations.


This isn’t the first time Hawking and Musk have publicly called for caution regarding the applications of AI. Last January, they both signed a similar FLI document. It too expressed the need to avert the potential dystopian pitfalls of AI, while urging the exploration of its use toward the “eradication of disease and poverty.”

Wozniak signed the same earlier letter as well, though his views on AI seem to have lightened up since. The Apple co-founder recently said he’s gotten over his fear that we’ll be replaced by artificially intelligent bots. When they do eventually take over, he thinks we’ll make great pets for them.
