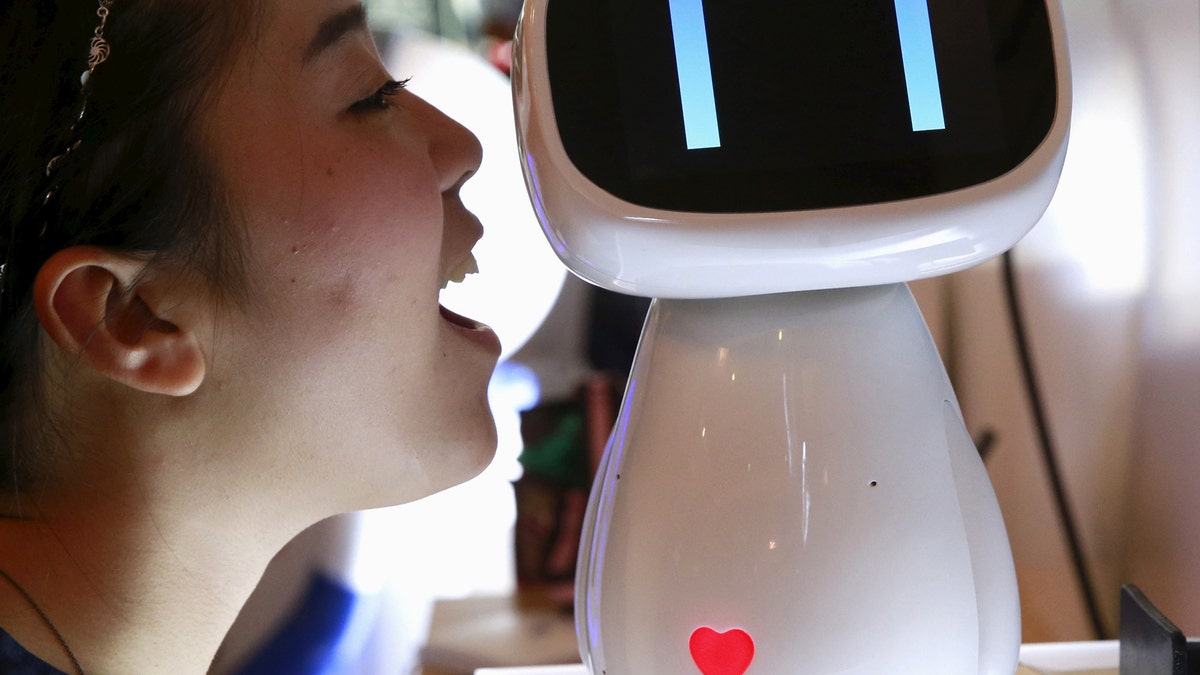

File photo: A visitor speaks to Baidu's robot Xiaodu at the 2015 Baidu World Conference in Beijing, China, September 8, 2015. (REUTERS/Kim Kyung-Hoon)

As evidenced by Apple's rumored plans to replace Touch ID with facial recognition technology for the iPhone 8, the ability of computers to seamlessly recognize faces is pretty darn impressive these days. The technology is not infallible, however, and there are still things capable of tripping it up. One example? Hands covering faces, which represent a significant challenge because a particularly animated hand gesture can so easily obscure a speaker's face. Fortunately, computer science researchers are here to help.

Researchers from the University of Central Florida and Carnegie Mellon University have developed a method of dealing with the so-called "facial occlusion" problem. Called Hand2Face (which admittedly sounds a little bit like that early 2000s "talk to the hand" meme), the technology can help improve facial recognition for a variety of applications, ranging from security to making machines better understand our emotions.

"Recognizing and working with facial occlusions are among the challenging problems in computer vision," Behnaz Nojavanasghari, one of the researchers, told Digital Trends. "Hand-over-face occlusions are particularly challenging as hands and faces have similar colors and textures, and there are a wide variety of hand-over-face occlusions and gestures that can happen. To build accurate and generalizable frameworks, our models need to see large and diverse samples in the training phase. Collecting and annotating large corpus of data is time demanding, and limits many to work with smaller volumes of data, which can result in building models that do not generalize well."

A big part of the team's research involves building a bigger archive of hand-obscured face images for machines to learn from. This meant creating a system for identifying hands in images in the same way that present facial recognition systems identify eyes, noses, or mouths. Larger data sets can then be built up by getting the computer to automatically composite new images by taking hands from one picture and pasting them onto another. To make the synthesized images appear genuine, the computer color-corrects, scales, and orients the hands to emulate realistic images.
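To make the compositing idea concrete, here is a minimal sketch in plain Python. It is not the researchers' implementation: the function names are hypothetical, images are simple nested lists of RGB tuples, color correction is approximated by shifting each channel toward the face's average color, and the orientation step is left out for brevity.

```python
from statistics import mean


def scale_nearest(grid, new_h, new_w):
    """Resize a 2-D grid (image or mask) with nearest-neighbor sampling."""
    old_h, old_w = len(grid), len(grid[0])
    return [
        [grid[r * old_h // new_h][c * old_w // new_w] for c in range(new_w)]
        for r in range(new_h)
    ]


def mean_color(image):
    """Average RGB color over every pixel of an image."""
    pixels = [p for row in image for p in row]
    return tuple(mean(p[ch] for p in pixels) for ch in range(3))


def match_color(hand, mask, target_mean):
    """Shift each channel so the masked hand pixels average to target_mean.

    Assumption: a simple mean shift stands in for real color correction.
    """
    hand_pixels = [
        hand[r][c]
        for r in range(len(hand))
        for c in range(len(hand[0]))
        if mask[r][c]
    ]
    shifts = [target_mean[ch] - mean(p[ch] for p in hand_pixels) for ch in range(3)]

    def clamp(value):
        return max(0, min(255, round(value)))

    return [
        [tuple(clamp(p[ch] + shifts[ch]) for ch in range(3)) for p in row]
        for row in hand
    ]


def composite(face, hand, mask, top, left):
    """Paste the masked hand pixels onto a copy of the face image."""
    out = [row[:] for row in face]
    for r, row in enumerate(hand):
        for c, pixel in enumerate(row):
            rr, cc = top + r, left + c
            if mask[r][c] and 0 <= rr < len(out) and 0 <= cc < len(out[0]):
                out[rr][cc] = pixel
    return out


def synthesize(face, hand, mask, new_h, new_w, top, left):
    """Scale, color-correct, and paste a hand to make one synthetic sample."""
    scaled_hand = scale_nearest(hand, new_h, new_w)
    scaled_mask = scale_nearest(mask, new_h, new_w)
    corrected = match_color(scaled_hand, scaled_mask, mean_color(face))
    return composite(face, corrected, scaled_mask, top, left)
```

Calling `synthesize` repeatedly with different hands, sizes, and positions would yield many hand-over-face samples from a small pool of source images, which is the data-multiplying effect described above.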

That's not all, though: the method for identifying hand gestures could also be used, alongside facial expressions, to identify emotions. "In a majority of frameworks, facial occlusions are treated as noise and are discarded from analysis," Nojavanasghari said. "However, these occlusions can convey meaningful information regarding a person's affective state and should be used as an additional cue."

As the need for machines to be able to read our emotions grows (consider robot caregivers, teachers, or even just smarter AI assistants like Alexa and Siri), solving problems like this is only going to become more important. You can read an academic paper describing the work here.