The Rbutr tool lets people easily find counter-arguments to pages they are viewing. (FoxNews.com)

Have you heard about the dentist who pulled all of her ex-boyfriend's teeth out in a fit of jealous rage? What about the "fact" that more women are the victims of domestic abuse on Super Bowl Sunday than on any other day of the year? How about the story about terrorists who have stolen thousands of dollars' worth of UPS uniforms? (Like delivery people don't have enough problems already.)

Need I go on?

All these stories are false, but thanks in large part to the wonderful World Wide Web, they live on, ricocheting around cyberspace. Unfortunately, some new research points out how difficult it is to correct mistaken ideas and stories online.

One would think that this fount of digital knowledge, the greatest democratizing communications tool the world has ever seen, the ultimate solution to every education problem, and the medium that was supposed to wipe out ignorance forever would be the best way to correct inaccurate beliefs. Think again. Even the most cutting-edge, immediate Web technology may not be enough to correct misunderstandings. Indeed, immediacy may work against the truth.

In experiments designed to determine the best way to correct misinformation and inaccurate beliefs online, researchers at Ohio State University found that instantly offering corrected information may not help as much as we'd like.

"It should be better to correct a rumor at the source before it ever spreads," R. Kelly Garrett, lead author of the study and assistant professor of communication at Ohio State University, told FoxNews.com. "But instead, the attempt to correct it before it spreads seems to trigger a defensive response."

In other words, when presented instantly with corrected information (say, that the statistics are fake), we may refuse to believe it, especially if we were predisposed to believe the false rumor in the first place. So if you're already afraid of dentists and think they are all sadistic fiends, you probably still believe there's a faithless, toothless ex-boyfriend out there.

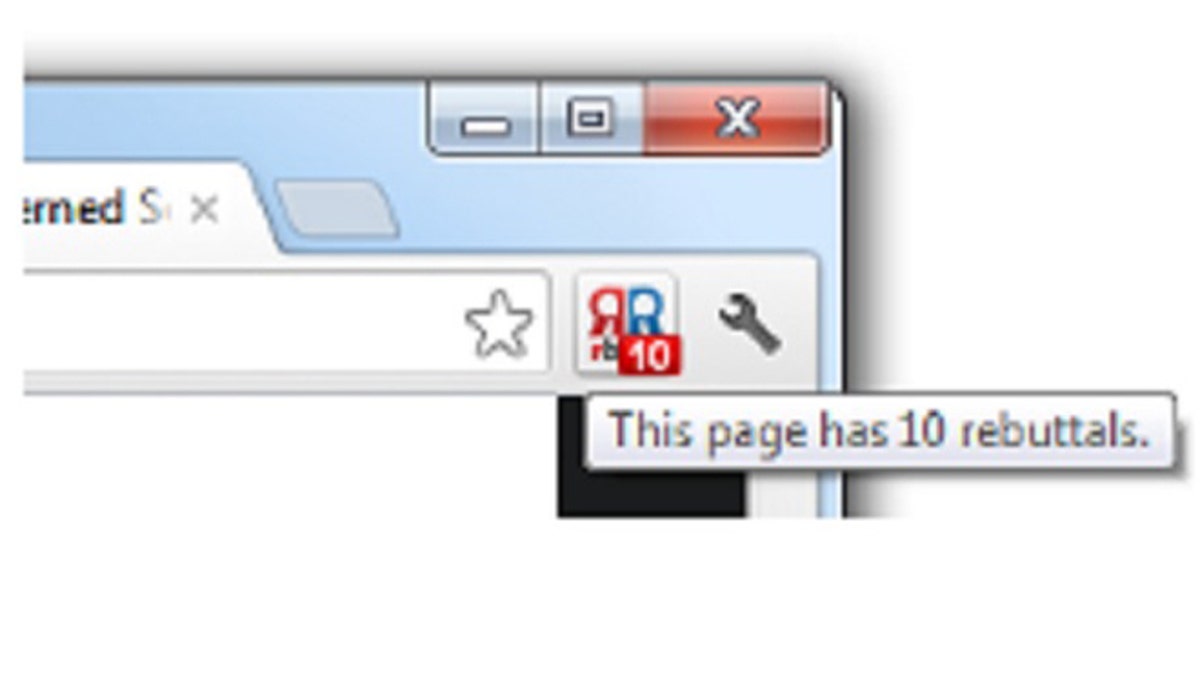

The point of the study was actually to determine whether Web-based programs that automatically present fact-checked information and corrections would improve the accuracy of a surfer's knowledge. Intel had one such research project called Dispute Finder, a fascinating browser extension that would highlight information and let you know that the item was either incorrect or at least contested by contradictory information. That program has ended, but it was an interesting tool for reporters, researchers, and anyone curious about other points of view. There are two other similar programs now being tested, Hypothes.is and Rbutr, both of which are in the early experimental stage.

Ideally, such programs would all but eliminate the "you can't trust anything on the Web" sentiment. In theory, every page online would be fact-checked, footnoted, and referenced so that a reader would know exactly where the information came from (this author is being paid by a large conglomerate!) and whether or not it was correct (the CDC says that touching aluminum will not give you Alzheimer's).

Imagine, you would actually be able to trust what you read online!

Unfortunately, we're talking about human beings, not binary code. And changing people's minds isn't simply a matter of ones and zeros, as Garrett and his co-author Brian Weeks discovered.

Some participants in the study did in fact adjust their beliefs to form more correct views when information was instantly highlighted, pointing out errors. However, a disturbing number of people rejected the corrections. While precisely quantifying this trend wasn't possible in the research (how do you put a number on wide-ranging degrees of belief, confidence, or skepticism?), the trend itself was "scary," says Garrett. In fact, they rejected the corrections even though they were told the corrections came from a reputable, impartial source.

On the positive side, people were more receptive to the corrections when they were presented after some delay. When corrected information was presented 3 minutes after reading a story (a distracting task was introduced during that time), participants--who included ethnically and politically diverse members--were moderately more likely to adjust their views and form more accurate beliefs.

One could nevertheless see the results as showing that the battle for truth and against misinformation is a Sisyphean war against a rising tide of blogs and babble online. If people won't correct their beliefs and adjust their understanding when presented with the truth, how can we possibly ever correct spurious Twitter tales or wacky Wikipedia entries or Facebook falsehoods?

Garrett suggests that some people may not see instantaneous corrections online as credible because they feel confrontational.

"It's like you're getting called out," he says. "It's saying, no, you're wrong."

This may account for why corrections separated in time from the initial erroneous report are more effective. We're not as immediately vested in the rumor, so we're more likely to accept the new, more credible information. We are, in short, less defensive.

Also, corrections that appear from the same source as the original story are more likely to be accepted. So a newspaper correction about a story it previously ran is more credible; the news source itself is saying that it made a mistake--not you.

Still, the research is disturbing and should be a cautionary tale for anyone online. Think of a rumor or personal joke made on a social networking site that could hurt your chances of getting a job. Then think of how difficult it would be to get the truth out so that employers don't think you, say, hate babies and despise fuzzy kittens.

Garrett doesn't think the situation is hopeless. He points out that some corrections are better than none. Some people did form more accurate beliefs based on amended information.

"We shouldn't throw up our hands entirely," says Garrett. "My next task will be to test for something that really works."

Follow John R. Quain on Twitter @jqontech or find more tech coverage at J-Q.com.